In the last fifteen years, many businesses, from newly founded startups to mid-sized companies to huge Fortune 500 corporations, have flocked to the cloud.

Driven by promises of cost optimization, flexibility, and innovation, they started moving many of their on-premises workloads to the data centers of the big cloud providers.

And yet, more and more stories are emerging from companies that say the results didn’t meet their expectations:

- 37signals, the company behind SaaS offerings such as Basecamp and HEY, moved out of the cloud, reporting that it was too expensive for its workload type.

- The large insurance company GEICO is moving many workloads back on-premises.

- This kicked off a broader debate about cloud repatriation, with some reports suggesting that many CIOs are planning to repatriate workloads.

So what’s really going on? Why do some migrations struggle while others thrive?

In this article, we’ll explore the real-world trade-offs of cloud adoption and how combining cloud and on-prem in new ways might make better use of both.

To understand what makes a cloud migration so difficult, let’s introduce the fictional company ACOE: A Corporation that Operates Everything. ACOE is a successful mid-sized corporation with a sizable in-house IT department (more than 500 people) that operates its own data centers.

ACOE’s executives have recently decided to move towards the cloud. Let’s put ourselves in their shoes and follow their journey.

The Land Before Cloud Times

Before we can migrate, we need to understand what our on-premises data centers actually look like. The following diagram shows a simplified view of ACOE’s data center, with its many specialized systems, teams, and technologies working in different corners:

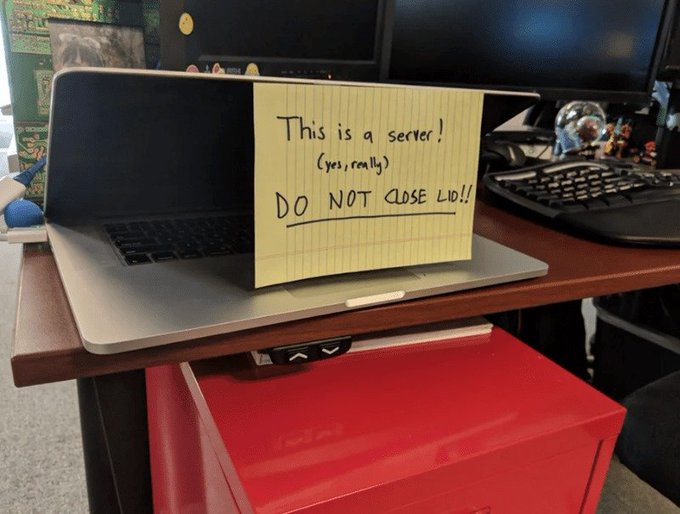

This picture is greatly simplified; in reality, there are many more systems in place. Somewhere in a dark corner, under an abandoned desk, runs a server whose purpose nobody knows but that everyone is too scared to shut off:

An important server. Source: Reddit

The key takeaway: like most established companies, ACOE’s data center has evolved into a complex jungle of interdependent systems and processes, tended by a team of specialists who keep everything running.

The Journey to the Cloud

So if we want to move to the cloud, which parts of this behemoth do we touch first?

Step 1: Go SaaS-first

Many companies start by moving standardized software to SaaS offerings:

- Adopt Microsoft 365 to replace AD, Exchange, and SharePoint servers

- Move the ERP to its vendor’s cloud version (if it’s standardized enough)

- Use hosted GitHub/GitLab instead of self-managed installations

- Consume Atlassian’s SaaS for Jira/Confluence (until you discover that half your data center has deep custom integrations into Jira that you need to unwind first)

This makes a lot of sense: we gain nothing by running these standardized products ourselves (and many of these SaaS vendors are pushing us toward their cloud offerings anyway), so let’s migrate to the SaaS offerings.

The picture looks a bit different now:

Step 2: Enable our Developers

Next, we want to give our developers a modern environment to build software.

After all, aren’t they the people who build the software that makes our company different? Our competitive advantage?

We decide to replace the “Internal Application Platform” box with a new platform in the cloud.

Step 2a: Move to which platform?

Kubernetes has clearly won the container wars, so that’s what most companies are standardizing on. We’ll use it as our target platform as well.

Fortunately, all the big cloud providers (hyperscalers) now offer managed Kubernetes services, so we pick one of them (or, if the decision-maker in our company plays golf with their account rep, that decision might already be made for us).

With our shiny new cloud tenant set up, we’re ready to deploy Kubernetes!

Well – almost. There are a few things we need to solve first:

Step 2b: Landing Zones & Networking

Every big cloud provider has the notion of a landing zone: a baseline set of resources, policies, and practices (covering IAM, multi-tenancy, billing, security, and connectivity) that lets an enterprise adopt the cloud in a controlled way.

The cloud provider’s solution architects will help us set this up, but we need our own people to build the knowledge to run it later. We can get there either by reskilling/upskilling existing employees or by hiring our first Cloud Architects/Engineers.

The process of setting up a landing zone usually comes with establishing network connectivity to the on-premises data center. Thus, we need to involve our network specialists. And since there will be a firewall shielding the cloud from on-prem and vice-versa, we also have to onboard our firewall team to the cloud.
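One concrete task in this step is address planning. Routed connectivity between the cloud and on-prem only works cleanly if the IP ranges on both sides don’t overlap.

The following minimal Python sketch checks a planned set of ranges for collisions using the standard `ipaddress` module. The range names and CIDRs are hypothetical, chosen only to produce one conflict.

```python
import ipaddress
from itertools import combinations

# Hypothetical address plan: existing on-prem ranges plus the ranges
# proposed for the new cloud landing zone.
ADDRESS_PLAN = {
    "onprem-datacenter": "10.0.0.0/12",
    "onprem-dmz": "192.168.10.0/24",
    "cloud-hub-network": "10.16.0.0/16",
    "cloud-spoke-apps": "10.8.0.0/14",  # deliberately collides with the on-prem /12
}


def find_overlaps(plan):
    """Return every pair of named ranges whose address spaces overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


if __name__ == "__main__":
    for a, b in find_overlaps(ADDRESS_PLAN):
        print(f"conflict: {a} ({ADDRESS_PLAN[a]}) overlaps {b} ({ADDRESS_PLAN[b]})")
```

For this hypothetical plan, the check reports a single conflict: `onprem-datacenter` (10.0.0.0/12) overlaps `cloud-spoke-apps` (10.8.0.0/14). That conflict has to be resolved before the two networks can be routed to each other.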

Our new cloud tenant now looks like this:

Step 2c: Kubernetes & Persistence

Finally, we’re ready to deploy Kubernetes to our tenant!

Since the applications also need a way to persist data, we add a managed RDBMS offering from the cloud provider and an object store to the mix:

This is a lot of new technology, each piece with its own complexities. To avoid overloading every developer team with managing it all, we establish platform teams: specialists in the respective areas (Kubernetes, DBs, …) who build self-service platforms that developers can build upon.

Great! We’ve built a modern cloud-native development platform in the public cloud:

Our developers are very happy: they can ship features in a self-service approach using modern tools. And since the developers are able to iterate more quickly, the business is happy too, as they can experiment and deliver faster.

Revisiting the Big Picture

We’ve done it: some boxes are now running in the cloud, our developers have a shiny new platform, and the business is happy with the faster pace.

Before charging ahead, though, let’s zoom out and look at our IT landscape as a whole:

At first glance, this seems like progress.

But when we look closer, some challenges become clear:

- Two separate worlds: We’ve created two isolated islands (the on-premises data center and the new cloud environment), each with its own technologies, processes, and people. In effect, we’ve doubled our complexity. This only works if we can either fully shut down one island or start sharing tools, processes, and people between them.

- Hard to shut down the old island: Fully turning off the on-premises data center isn’t easy:

  - Some workloads are too tightly coupled or outdated to migrate (the mainframe!).

  - Some critical data may not legally be allowed to leave our premises.

  - Regulators (like Switzerland’s FINMA) now demand that companies avoid putting all their eggs in one cloud provider’s basket, forcing either multi-cloud setups or on-prem copies of data.

  In short: we can’t get rid of either island.

- Higher costs for static workloads: The cloud shines for bursty or variable workloads. But for steady, always-on enterprise systems, it often costs more than on-prem infrastructure. This is what many call the “cloud premium”, and it is what drove 37signals out of the cloud.

- Lock-in and egress issues: Even if costs or complexity become too high, moving back isn’t simple. Through egress fees, cloud providers make it deliberately expensive to move data out, which creates another form of vendor lock-in.
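To put rough numbers on the cost-premium and egress points above, here is a back-of-the-envelope model in Python. All prices are hypothetical placeholders; real prices vary widely by provider, region, instance type, and negotiated discounts.

The model also makes two assumptions:
- The cloud autoscales perfectly, so we pay only for the hours we use.
- On-prem capacity is sized for the peak and paid for whether it is used or not.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # 8760 hours per year / 12

# Hypothetical prices, for illustration only.
CLOUD_PRICE_PER_VCPU_HOUR = 0.05   # pay-as-you-go
ONPREM_COST_PER_VCPU_MONTH = 15.0  # amortized hardware, power, space, operations
EGRESS_PRICE_PER_GB = 0.09


@dataclass
class Workload:
    name: str
    peak_vcpus: int
    busy_fraction: float  # share of the month the peak capacity is actually needed


def cloud_cost(w: Workload) -> float:
    # Assumes perfect autoscaling: we pay only for the hours we use.
    return w.peak_vcpus * HOURS_PER_MONTH * w.busy_fraction * CLOUD_PRICE_PER_VCPU_HOUR


def onprem_cost(w: Workload) -> float:
    # On-prem capacity is sized for the peak and costs the same when idle.
    return w.peak_vcpus * ONPREM_COST_PER_VCPU_MONTH


def break_even_fraction() -> float:
    """Busy fraction above which on-prem becomes cheaper than the cloud."""
    return ONPREM_COST_PER_VCPU_MONTH / (HOURS_PER_MONTH * CLOUD_PRICE_PER_VCPU_HOUR)


def egress_cost(gigabytes: float) -> float:
    # One-off cost of moving data out of the cloud provider's network.
    return gigabytes * EGRESS_PRICE_PER_GB


if __name__ == "__main__":
    for w in (Workload("erp", 100, 1.0), Workload("campaign-site", 100, 0.1)):
        print(f"{w.name}: cloud {cloud_cost(w):,.0f}/month, on-prem {onprem_cost(w):,.0f}/month")
    print(f"break-even busy fraction: {break_even_fraction():.0%}")
    print(f"one-off egress for 50 TB: {egress_cost(50_000):,.0f}")
```

With these made-up prices, the results come out as follows:
- The always-on ERP costs about 3,650 per month in the cloud versus 1,500 on-prem.
- The campaign site, busy 10% of the time, costs about 365 in the cloud versus 1,500 on-prem.
- The break-even lands at roughly 41% utilization: below it the cloud wins, above it on-prem does.
- Moving 50 TB out once adds about 4,500 in egress fees.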

This is the point where many companies struggle: running two worlds at once, without a clear path to unite or retire either one.

Has the Cloud Failed Us?

Looking at these challenges, it’s fair to ask: Was the cloud migration worth it? Should we push ahead? Or pause and reconsider?

Before we decide, let’s acknowledge what has worked well:

- SaaS for standard software makes sense: Moving standardized products to SaaS reduced our on-prem workload. Nobody is sad we don’t have to run SharePoint ourselves anymore. We benefit from vendors running their own software and delivering updates rapidly. That’s worth keeping.

- Cloud-native tools and practices: The cloud’s APIs and automation tooling enable us to adopt modern approaches like self-service platforms, infrastructure-as-code, and SRE practices, making our teams faster and more efficient.

- Scalability on demand: Being able to spin up infrastructure instantly, without waiting for procurement, has enabled rapid experimentation and made handling peak loads far easier.

Quite a few upsides! But the downsides are just as real.

So, should we go all-in on the cloud to maximize its benefits, or slow down to contain its risks?

Or – is there a third path? One that combines the best of both worlds?

Integrating the Two Islands: Kubernetes as the Common Fabric

While ACOE’s story is fictional and every cloud journey looks slightly different, many companies eventually end up asking these questions. They want the speed and flexibility of the cloud without giving up the control, cost efficiency, and stability of on-premises.

We believe it’s possible to combine the best of both worlds by adopting Kubernetes as a common platform spanning both environments.

Using Kubernetes as the fabric between on-prem and cloud offers several advantages:

- Shared tooling: Kubernetes provides powerful APIs and a rich ecosystem. The same tools (CI/CD, monitoring, security, policy) work no matter where your clusters run.

- Shared skills and processes: Standardizing on Kubernetes means your teams learn one platform instead of multiple cloud-specific stacks. This lowers the cognitive load and makes it easier to move people between environments.

- Reduced lock-in: By building platforms on Kubernetes instead of proprietary cloud services, you protect your investment. Workloads can move between providers (or back on-prem) without major rewrites.

- Cost flexibility: Portability gives you leverage:

  - Run stable, predictable workloads on cheaper on-prem infrastructure.

  - Use the cloud only when you need extra capacity or specialized hardware.

  - If prices rise, shift workloads elsewhere.
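The placement logic in the cost-flexibility list can be sketched as a small rule:
- Steady workloads go on-prem while capacity lasts.
- Bursty workloads go to the cloud.
- Workloads that need hardware we don’t have on-prem go to the cloud.
- Any overflow goes to the cloud.

This is a hypothetical, greedy heuristic in Python, not a real scheduler. The `Workload` fields and the `place` function are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    vcpus: int
    steady: bool             # always-on, predictable load?
    needs_gpu: bool = False  # requires specialized hardware?


def place(workloads, onprem_free_vcpus, onprem_has_gpus=False):
    """Hypothetical rule: steady workloads on-prem while capacity lasts, the rest to the cloud."""
    placement = {}
    free = onprem_free_vcpus
    # Steady workloads first, largest first (simple greedy, not optimal bin packing).
    ordered = sorted(workloads, key=lambda w: (not w.steady, -w.vcpus))
    for w in ordered:
        fits_hardware = onprem_has_gpus or not w.needs_gpu
        if w.steady and fits_hardware and w.vcpus <= free:
            placement[w.name] = "on-prem"
            free -= w.vcpus
        else:
            # Bursty workloads, missing hardware, and overflow go to the cloud.
            placement[w.name] = "cloud"
    return placement


if __name__ == "__main__":
    workloads = [
        Workload("erp", 64, steady=True),
        Workload("billing", 32, steady=True),
        Workload("reporting", 24, steady=True),
        Workload("campaign-site", 48, steady=False),
        Workload("ml-training", 16, steady=False, needs_gpu=True),
    ]
    for name, target in place(workloads, onprem_free_vcpus=100).items():
        print(f"{name:15} -> {target}")
```

With 100 free on-prem vCPUs, `erp` and `billing` land on-prem and `reporting` overflows to the cloud. Because every workload targets Kubernetes, re-running the rule with different capacity or prices changes only where things run, not how they are packaged.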

Instead of separate islands, Kubernetes can give us a single platform everywhere.
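As a small illustration of “a single platform everywhere”: a standard `apps/v1` Deployment contains nothing specific to where the cluster runs. The same manifest can therefore be applied to an on-prem cluster and a managed cloud cluster, with only the kubectl context changing.

The sketch below builds the manifest in Python and prepares one `kubectl --context <name> apply -f -` call per cluster. The context names, image, and app name are hypothetical. Environment-specific pieces such as storage classes, load-balancer types, or ingress classes usually still differ between clusters.

```python
import json


def deployment(name, image, replicas):
    """Build a plain apps/v1 Deployment; nothing in it depends on the cluster's location."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical kubeconfig contexts: one on-prem cluster, one managed cloud cluster.
CONTEXTS = ["onprem-dc1", "cloud-managed-prod"]


def rollout_commands(manifest, contexts):
    """Return (argv, stdin payload) pairs; the payload is identical for every cluster."""
    payload = json.dumps(manifest)
    return [(["kubectl", "--context", ctx, "apply", "-f", "-"], payload) for ctx in contexts]


if __name__ == "__main__":
    manifest = deployment("shop", "registry.example.com/acoe/shop:1.4.2", replicas=3)
    for cmd, payload in rollout_commands(manifest, CONTEXTS):
        # To actually apply: subprocess.run(cmd, input=payload, text=True, check=True)
        print(" ".join(cmd))
```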

The Vision: The Cloud Native Data Center

You might say: “Kubernetes only runs applications! What about all the other boxes in our data center diagram?”

That’s a fair question. And this is where it gets interesting: Kubernetes is rapidly evolving beyond just stateless apps and can increasingly host many of the traditional data center workloads.

Some examples:

- Networking and security: Kubernetes has always provided basic service connectivity, but modern CNIs like Cilium now add L7-aware policies, deep observability, and even service mesh capabilities. These are features that once required expensive firewall or load-balancer appliances. Reverse proxies, ingress gateways, and software load balancers are now common parts of the cluster itself.

- Databases and stateful systems: Thanks to operators, many databases now target Kubernetes as a primary deployment platform. Examples include PostgreSQL (via CloudNativePG), MySQL, MongoDB, and Elasticsearch. Most of them offer commercial support for running on Kubernetes.

- AI/ML workloads: The AI ecosystem is embracing Kubernetes as well. NVIDIA highlights this trend, as ML frameworks and GPU scheduling tools are increasingly becoming cloud-native.

- Virtual machines: Projects like KubeVirt aim to run VMs alongside containers in Kubernetes. While not yet at feature parity with established hypervisors, it’s quickly becoming viable for many VM workloads.

- ISVs and developer platforms: More and more software vendors are shipping their products as Kubernetes-native packages:

  - Monitoring, logging, tracing? Most modern solutions target Kubernetes first.

  - Remote access or developer environments? Tools like Teleport and cloud development environments (CDEs) can run directly inside your cluster.

  - Even traditional enterprise software is now often delivered as a Helm chart.
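To make this concrete, here are two of the workloads mentioned above expressed as ordinary Kubernetes objects, built in Python and printed as JSON:
- A Cilium L7 policy that only lets frontend pods send HTTP `GET` requests to the backend’s `/api/` paths.
- A three-instance PostgreSQL cluster managed by CloudNativePG.

This is a sketch assuming Cilium and the CloudNativePG operator are already installed; their CRDs must exist for the objects to be accepted. All names, labels, ports, and sizes are hypothetical.

```python
import json


def cilium_l7_policy():
    """Allow only HTTP GETs on /api/ paths from frontend pods to backend pods on port 8080."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": "backend-allow-frontend-get"},
        "spec": {
            "endpointSelector": {"matchLabels": {"app": "backend"}},
            "ingress": [
                {
                    "fromEndpoints": [{"matchLabels": {"app": "frontend"}}],
                    "toPorts": [
                        {
                            "ports": [{"port": "8080", "protocol": "TCP"}],
                            # L7 rule: method and path are matched as regular expressions.
                            "rules": {"http": [{"method": "GET", "path": "/api/.*"}]},
                        }
                    ],
                }
            ],
        },
    }


def cnpg_cluster(name, instances, storage_size):
    """A PostgreSQL cluster (one primary plus replicas) managed by the CloudNativePG operator."""
    return {
        "apiVersion": "postgresql.cnpg.io/v1",
        "kind": "Cluster",
        "metadata": {"name": name},
        "spec": {"instances": instances, "storage": {"size": storage_size}},
    }


def as_list(*items):
    # kubectl can apply a v1 List that wraps several objects.
    return {"apiVersion": "v1", "kind": "List", "items": list(items)}


if __name__ == "__main__":
    objects = as_list(cilium_l7_policy(), cnpg_cluster("orders-db", 3, "20Gi"))
    print(json.dumps(objects, indent=2))
```

The printed JSON can be piped into `kubectl apply -f -`. The same tooling that deploys applications then also manages firewall-style rules and databases.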

As the CNCF ecosystem keeps expanding, more and more of the old standalone infrastructure can be absorbed into the Kubernetes platform.

Eventually, our architecture could look something like this:

With each team and technology that aligns on Kubernetes, more benefits materialize: shared tools and skills, less lock-in, and less complexity overall.

Continue reading

This article is part of a series in which we cover how to assemble the building blocks of a Kubernetes-based enterprise data center: the Cloud Native Data Center.

Here’s what’s ahead:

- Part 1: Has the Cloud Delivered on Its Promise? (this post)

- Part 2: Why on-prem Kubernetes is hard

- Part 3: Choosing your on-prem Kubernetes stack

- Part 4: Taming the network jungle (coming soon)

- Part 5: Dude, where is my storage? (coming soon)

- Part 6: Bringing Data(bases) into Kubernetes (coming soon)

- Part 7: Running VM workloads on Kubernetes (coming soon)

Follow along as we explore how Kubernetes can run not just applications, but the entire data center.